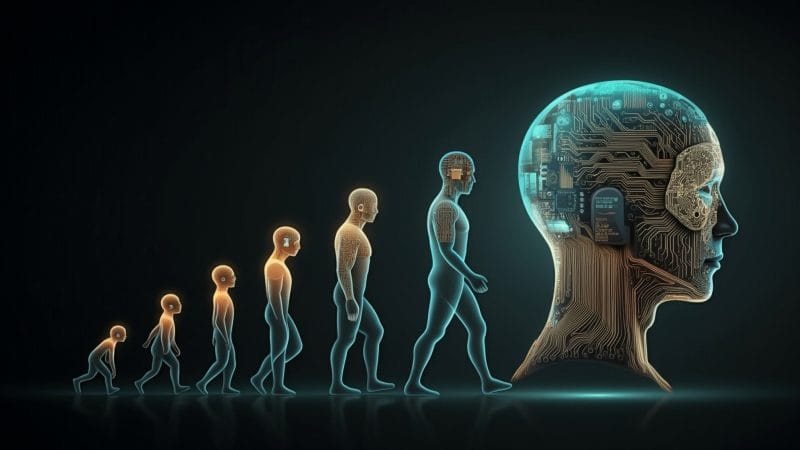

There was a time when debates about determinism and free will belonged to philosophy departments and late-night dorm room conversations. They were enjoyable precisely because they seemed harmless. Whatever the answer, life went on. Courts judged, doctors decided, teachers taught, and politicians were still—at least nominally—held responsible for their actions. That era is over.

Artificial intelligence has transformed what once appeared to be an abstract philosophical question into a concrete issue of governance, power, and accountability. Determinism is no longer merely a theory about how the universe works. It is becoming an operating principle for modern institutions. And that changes everything.

AI systems are deterministic by construction. They operate through statistical inference, optimization, and probability. Even when their outputs surprise us, they remain bound by mathematical constraints. Nothing in these systems resembles judgment, interpretation, or understanding in the human sense.

AI does not deliberate.

It does not reflect.

It does not bear responsibility for outcomes.

Yet increasingly, its outputs are treated not as tools, but as decisions. This is the quiet revolution of our time.

The appeal is obvious. Institutions have always struggled with human variability. People are inconsistent, emotional, slow, and sometimes disobedient. Bureaucracies prefer predictability, and algorithms promise exactly that: standardized decisions at scale, immune to fatigue and dissent.

In healthcare, algorithms promise more efficient triage. In finance, better risk assessment. In education, objective evaluation. In public policy, “evidence-based” governance. In content moderation, neutrality. Who could object to systems that claim to remove bias and optimize outcomes? But beneath this promise lies a fundamental confusion.

Prediction is not judgment.

Optimization is not wisdom.

Consistency is not legitimacy.

Human decision-making has never been purely computational. It is interpretive by nature. People weigh context, meaning, consequence, and moral intuition. They draw on memory, experience, and a sense—however imperfect—of responsibility for what follows. This is precisely what institutions find inconvenient.

Human judgment introduces friction. It requires explanation. It exposes decision-makers to blame. Deterministic systems, by contrast, offer something far more attractive: decisions without decision-makers.

When an algorithm denies a loan, flags a citizen, deprioritizes a patient, or suppresses speech, no one appears responsible. The system did it. The data spoke. The model decided.

Determinism becomes a bureaucratic alibi.

Technology has always shaped institutions, but until recently it mostly extended human agency. Calculators assisted reasoning. Spreadsheets clarified trade-offs. Even early software left humans visibly in control. AI changes that relationship.

Systems designed to predict are now positioned to decide. Probabilities harden into policies. Risk scores become verdicts. Recommendations quietly turn into mandates. Once embedded, these systems are difficult to challenge. After all, who argues with “the science”?

This is why the old philosophical debate has become urgent.

Classical determinism was a claim about causality: given enough information, the future could be predicted. Today, determinism is turning into a governance philosophy. If outcomes can be predicted well enough, institutions ask, why allow discretion at all?

Non-determinism is often caricatured as chaos. But properly understood, it is neither randomness nor irrationality. It is the space where interpretation occurs, where values are weighed, and where responsibility attaches to a person rather than a process.

Remove that space, and decision-making does not become more rational. It becomes unaccountable.

The real danger of AI is not runaway intelligence or sentient machines. It is the slow erosion of human responsibility under the banner of efficiency.

The defining conflict of the 21st century will not be between humans and machines. It will be between two visions of intelligence: deterministic optimization versus meaning-making under uncertainty.

One is scalable.

The other is accountable.

Artificial intelligence forces us to decide which one governs our lives.

Published under a Creative Commons Attribution 4.0 International License

For reprints, please set the canonical link back to the original Brownstone Institute Article and Author.